For my Raspberry Pi project in the garden, I need a remote shell. However, I ran into some issues: port forwarding doesn't work because my LTE provider doesn't allow it. Despite several attempts, not even ICMP packets got through.

Consequently, I tried dataplicity.com as an alternative. It worked, but it had problems after disconnects or when my computer went into standby.

Therefore, I plan to test Teleport Community on AWS.

Without Terraform

Basically, that's all you need (without Terraform).

Replace the email address and cluster name with your own values.

```bash
#!/bin/bash
# Replace these two values with your own
ACME_EMAIL="name@megadodo.org"
CLUSTER_NAME="teleport.project.megadodo.org"

sudo yum update -y
sudo yum install -y wget jq

# Download and run the Teleport install script (14.3.3 is the version used in this post)
sudo wget https://goteleport.com/static/install.sh
sudo bash install.sh 14.3.3

sudo teleport configure -o file \
  --acme --acme-email="$ACME_EMAIL" \
  --cluster-name="$CLUSTER_NAME"

sudo systemctl enable teleport
sudo systemctl start teleport

# Give the service a moment to start before calling tctl
sleep 10

sudo tctl users add teleport-admin --roles=editor,access --logins=root,ubuntu,ec2-user \
  | sudo tee -a /root/teleport_invite_url

echo "User 'teleport-admin' has been created. Share this URL with the user to complete user setup:"
sudo cat /root/teleport_invite_url
```

With Terraform

To set up the Teleport host with Terraform, I used the following components:

- EC2 instance (t3.micro) with Amazon Linux 2023
- Security group (teleport-sg)
- Hosted zone (e.g. project.megadodo.org) in AWS (optional; you can also create an A or CNAME record with your existing DNS provider)
- DNS A record: teleport.project.megadodo.org => public IP
- Local Terraform backend (I know it's not best practice, but it's fine for a dev stage)

The folder structure looks like this:

```
┌──(user㉿host)-[~/github/terraform/teleport-server]
└─$ ls -la
total 192
drwxr-xr-x@ 13 user staff 416 24 Jan 23:10 .
drwxr-xr-x@ 17 user staff 544 24 Jan 22:42 ..
drwxr-xr-x@ 3 user staff 96 24 Jan 20:52 .terraform
-rw-r--r--@ 1 user staff 3467 24 Jan 21:18 .terraform.lock.hcl
-rw-r--r--@ 1 user staff 78 24 Jan 21:42 README.md
-rw-r--r--@ 1 user staff 47 24 Jan 22:44 provider.tf
-rw-r--r--@ 1 user staff 431 24 Jan 22:43 route53.tf
-rw-r--r--@ 1 user staff 957 24 Jan 22:48 securitygroup.tf
-rw-r--r--@ 1 user staff 447 24 Jan 21:16 ssh_key.tf
-rw-r--r--@ 1 user staff 877 24 Jan 23:09 teleport_server.tf
-rw-r--r--@ 1 user staff 32015 24 Jan 23:06 terraform.tfstate
-rw-r--r--@ 1 user staff 987 24 Jan 22:45 variables.tf
```

provider.tf

```hcl
provider "aws" {
  region = var.aws_region
}
```

Route53

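If you want Terraform to create the Route53 hosted zone, use the file as it is.

If you already have a hosted zone, comment out the aws_route53_zone resource and the zone_id output, then in aws_route53_record.teleport_entry swap the active zone_id line for the commented one and replace YourAWSHostedZoneID with the ID of your hosted zone.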
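If you don't have the ID of an existing hosted zone at hand, the AWS CLI can look it up. A minimal sketch, assuming the AWS CLI is installed and configured with credentials; `hosted_zone_id` and `strip_zone_prefix` are hypothetical helper names:

```bash
#!/usr/bin/env bash
# Sketch: look up the ID of an existing Route53 hosted zone via the AWS CLI.

# strip_zone_prefix ID: Route53 returns IDs like "/hostedzone/Z0123...";
# keep only the part after the last "/".
strip_zone_prefix() {
  printf '%s\n' "${1##*/}"
}

# hosted_zone_id NAME: print the bare zone ID for NAME (e.g. project.megadodo.org).
# Route53 stores zone names with a trailing dot, hence "$name." in the filter.
# If several zones share the name (e.g. public and private), only the last ID is kept.
hosted_zone_id() {
  local name=$1 raw
  raw=$(aws route53 list-hosted-zones-by-name --dns-name "$name" \
    --query "HostedZones[?Name=='$name.'].Id" --output text) || return 1
  [ -n "$raw" ] || return 1
  strip_zone_prefix "$raw"
}

# Usage:
#   hosted_zone_id project.megadodo.org
```

The returned value is what goes in place of YourAWSHostedZoneID.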

```hcl
# If you already have a hosted zone, comment out this resource and the zone_id output below.
# If you manage DNS elsewhere, rename or delete this whole file.
resource "aws_route53_zone" "hosted_zone" {
  name = var.hosted_zone_name

  tags = {
    Terraform   = "true"
    Environment = "production"
  }
}

resource "aws_route53_record" "teleport_entry" {
  zone_id = aws_route53_zone.hosted_zone.id
  # zone_id = "YourAWSHostedZoneID"
  name    = "teleport"
  type    = "A"
  ttl     = 60
  records = [aws_instance.teleport_instance.public_ip]
}

output "zone_id" {
  value = aws_route53_zone.hosted_zone.id
}
```

SecurityGroup

securitygroup.tf creates the security group and its rules.

After the Teleport admin user has been fully set up, you can remove the ingress rule for port 22.
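Until you remove it, the SSH rule below is open to 0.0.0.0/0. If you would rather limit it to your own connection during setup, one option is to pass your current public IP as a /32 CIDR. A sketch, assuming the AWS endpoint checkip.amazonaws.com is reachable and that you add a hypothetical `ssh_cidr` variable to variables.tf and use `cidr_blocks = [var.ssh_cidr]` in the SSH rule:

```bash
#!/usr/bin/env bash
# to_cidr32 IP: print "IP/32" if IP is a valid dotted-quad IPv4 address, else fail.
to_cidr32() {
  local ip=$1 octet
  [[ $ip =~ ^([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})$ ]] || return 1
  for octet in "${BASH_REMATCH[@]:1}"; do
    # 10# forces base 10 so values like "08" are not parsed as octal
    (( 10#$octet <= 255 )) || return 1
  done
  printf '%s/32\n' "$ip"
}

# my_ssh_cidr: look up this machine's public IPv4 address and print it as a /32.
my_ssh_cidr() {
  local ip
  ip=$(curl -fsS https://checkip.amazonaws.com) || return 1
  to_cidr32 "$ip"
}

# Usage (ssh_cidr is a hypothetical variable you would add yourself):
#   terraform plan -var "ssh_cidr=$(my_ssh_cidr)" -out teleport.plan
```

Keep in mind that an LTE or home connection may change its public IP, in which case the rule has to be re-applied.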

```hcl
resource "aws_security_group" "teleport_sg" {
  name        = "teleport-sg"
  description = "Security group for Teleport"
}

# HTTPS for the Teleport web UI and proxy
resource "aws_security_group_rule" "teleport_sg_ingress_https" {
  security_group_id = aws_security_group.teleport_sg.id
  type              = "ingress"
  from_port         = 443
  to_port           = 443
  protocol          = "tcp"
  cidr_blocks       = ["0.0.0.0/0"]
}

# SSH, only needed until the Teleport admin user is set up
resource "aws_security_group_rule" "teleport_sg_ingress_ssh" {
  security_group_id = aws_security_group.teleport_sg.id
  type              = "ingress"
  from_port         = 22
  to_port           = 22
  protocol          = "tcp"
  cidr_blocks       = ["0.0.0.0/0"]
}

# Allow any outgoing traffic (Terraform removes the default allow-all egress
# rule from security groups it creates, so it has to be added explicitly)
resource "aws_security_group_rule" "teleport_sg_egress_any" {
  security_group_id = aws_security_group.teleport_sg.id
  type              = "egress"
  from_port         = 0
  to_port           = 0
  protocol          = "-1"
  cidr_blocks       = ["0.0.0.0/0"]
}
```

SSH Access

ssh_key.tf generates an RSA key pair with Terraform, registers the public key in AWS, and writes the private key to ~/.ssh/ssh_key_ec2.pem (the file name comes from the key_name variable).

If you would rather create a key manually, you can generate one with:

```bash
ssh-keygen -P "" -t rsa -b 4096 -m pem -f ssh_key_ec2.pem
```

ssh_key.tf:

```hcl
resource "tls_private_key" "ec2_ssh_key" {
  algorithm = "RSA"
  rsa_bits  = 4096
}

resource "aws_key_pair" "generated_key" {
  key_name   = var.key_name
  public_key = tls_private_key.ec2_ssh_key.public_key_openssh
}

# Store the private key locally with restrictive permissions
resource "local_sensitive_file" "pem_file" {
  filename             = pathexpand("~/.ssh/${var.key_name}.pem")
  file_permission      = "600"
  directory_permission = "700"
  content              = tls_private_key.ec2_ssh_key.private_key_pem
}
```

Teleport EC2 Instance

teleport_server.tf creates the server itself; the user_data script defined in it runs automatically on first boot.

Note that you can specify the login names that should be available for selection in Teleport by default. In my case these are:

root,ubuntu,ec2-user

The Teleport version to install (14.3.3) is also passed here.
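The user_data script below waits a fixed `sleep 10` before calling `tctl`; if Teleport takes longer to come up, `tctl users add` fails and the invite file stays empty. A hedged alternative is a small retry helper that you could paste at the top of the user_data script in place of the sleep. `retry` is a hypothetical name, and using `tctl status` as a readiness check is an assumption:

```bash
#!/bin/bash
# retry MAX_ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it exits with status 0; gives up after MAX_ATTEMPTS tries.
retry() {
  local max=$1 delay=$2 attempt=1
  shift 2
  until "$@"; do
    if [ "$attempt" -ge "$max" ]; then
      echo "retry: giving up after $attempt attempts: $*" >&2
      return 1
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
  return 0
}

# Possible use in user_data, replacing "sleep 10":
#   retry 30 2 sudo tctl status > /dev/null
#   sudo tctl users add teleport-admin --roles=editor,access --logins=root,ubuntu,ec2-user | sudo tee -a /root/teleport_invite_url
```

The helper deliberately avoids `${...}`: inside a Terraform heredoc that syntax is interpolated by Terraform and would have to be escaped as `$${...}`.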

```hcl
resource "aws_instance" "teleport_instance" {
  ami                    = var.ami_id
  instance_type          = var.instance_type
  key_name               = aws_key_pair.generated_key.key_name
  vpc_security_group_ids = [aws_security_group.teleport_sg.id]

  tags = {
    Name = "teleport-instance"
  }

  user_data = <<-EOF
    #!/bin/bash
    sudo yum update -y
    curl https://goteleport.com/static/install.sh | sudo bash -s 14.3.3

    sudo teleport configure -o file \
      --acme --acme-email=${var.acme_email} \
      --cluster-name=${var.cluster_name}

    sudo systemctl enable teleport
    sudo systemctl start teleport

    # wait for service to be ready
    sleep 10

    sudo tctl users add teleport-admin --roles=editor,access --logins=root,ubuntu,ec2-user | sudo tee -a /root/teleport_invite_url
  EOF
}
```

Environment Variables

Specify your environment in variables.tf:

```hcl
variable "aws_region" {
  description = "AWS region where resources will be created"
  default     = "eu-north-1" # Replace with your desired default region
}

variable "ami_id" {
  description = "AMI ID for the Teleport-compatible image"
  # default   = "ami-0506d6d51f1916a96"
  default     = "ami-0d0b75c8c47ed0edf" # Amazon Linux 2023; AMI IDs differ per region, replace as needed
}

variable "key_name" {
  description = "Name of the AWS key pair"
  default     = "ssh_key_ec2" # Replace with your key pair name
}

variable "instance_type" {
  description = "EC2 instance type"
  default     = "t3.micro" # Adjust instance type as needed
}

variable "acme_email" {
  description = "Email address for ACME (Let's Encrypt) certificate"
  default     = "<name>@megadodo.org" # Replace with your email address
}

variable "cluster_name" {
  description = "Cluster name for Teleport"
  default     = "teleport.project.megadodo.org" # Replace with your desired cluster name
}

# Rename route53.tf to route53.tf_ if you don't need it
variable "hosted_zone_name" {
  description = "Name for Hosted Zone"
  default     = "project.megadodo.org" # Replace with your hosted zone name
}
```

Terraform init

Initializing the local backend

```
┌──(user㉿host)-[/home/user/teleport-server]
└─$ terraform init

Initializing the backend...

Initializing provider plugins...
- Finding latest version of hashicorp/aws...
- Finding latest version of hashicorp/local...
- Finding latest version of hashicorp/tls...
- Installing hashicorp/aws v5.33.0...
- Installed hashicorp/aws v5.33.0 (signed by HashiCorp)
- Installing hashicorp/local v2.4.1...
- Installed hashicorp/local v2.4.1 (signed by HashiCorp)
- Installing hashicorp/tls v4.0.5...
- Installed hashicorp/tls v4.0.5 (signed by HashiCorp)

Terraform has created a lock file .terraform.lock.hcl to record the provider
selections it made above. Include this file in your version control repository
so that Terraform can guarantee to make the same selections by default when
you run "terraform init" in the future.

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see
any changes that are required for your infrastructure. All Terraform commands
should now work.

If you ever set or change modules or backend configuration for Terraform,
rerun this command to reinitialize your working directory. If you forget, other
commands will detect it and remind you to do so if necessary.
```

Terraform plan

Create and save the Terraform plan inside your Terraform folder:

```
terraform plan -out teleport.plan

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:
  + create

Terraform will perform the following actions:

  # aws_instance.teleport_instance will be created
  + resource "aws_instance" "teleport_instance" {
      + ami = "ami-0d0b75c8c47ed0edf"
      + arn = (known after apply)
      + associate_public_ip_address = (known after apply)
------snip ----
----- snap ----
      + public_key_pem = (known after apply)
      + rsa_bits = 4096
    }

Plan: 10 to add, 0 to change, 0 to destroy.

Changes to Outputs:
  + zone_id = (known after apply)

───────────────────────────────────────────────────────────────────────────────

Saved the plan to: teleport.plan

To perform exactly these actions, run the following command to apply:
    terraform apply "teleport.plan"
```

Terraform apply

After creating the plan, run terraform apply to create the environment in AWS:

```
$ terraform apply teleport.plan
tls_private_key.ec2_ssh_key: Creating...
aws_route53_zone.hosted_zone: Creating...
aws_security_group.teleport_sg: Creating...
aws_security_group.teleport_sg: Creation complete after 2s [id=sg-0caf87b3c388a63b2]
aws_security_group_rule.teleport_sg_egress_any: Creating...
aws_security_group_rule.teleport_sg_ingress_https: Creating...
aws_security_group_rule.teleport_sg_ingress_ssh: Creating...
aws_security_group_rule.teleport_sg_egress_any: Creation complete after 0s [id=sgrule-551642377]
aws_security_group_rule.teleport_sg_ingress_ssh: Creation complete after 1s [id=sgrule-2538702532]
aws_security_group_rule.teleport_sg_ingress_https: Creation complete after 1s [id=sgrule-2164654274]
tls_private_key.ec2_ssh_key: Creation complete after 7s [id=379bc61f20467cfc2a424f3919b8a6184c73c23a]
aws_key_pair.generated_key: Creating...
local_sensitive_file.pem_file: Creating...
local_sensitive_file.pem_file: Creation complete after 0s [id=6da0bc4a8e1d3dec4ca11c836cf377c8281c1804]
aws_key_pair.generated_key: Creation complete after 0s [id=ssh_key_ec2]
aws_instance.teleport_instance: Creating...
aws_route53_zone.hosted_zone: Still creating... [10s elapsed]
aws_instance.teleport_instance: Still creating... [10s elapsed]
aws_instance.teleport_instance: Creation complete after 13s [id=i-0c3481035c328c0f8]
aws_route53_zone.hosted_zone: Still creating... [20s elapsed]
aws_route53_zone.hosted_zone: Still creating... [30s elapsed]
aws_route53_zone.hosted_zone: Still creating... [40s elapsed]
aws_route53_zone.hosted_zone: Still creating... [50s elapsed]
aws_route53_zone.hosted_zone: Creation complete after 51s [id=Z02168653GXSKLJCUVVL0]
aws_route53_record.teleport_entry: Creating...
aws_route53_record.teleport_entry: Still creating... [10s elapsed]
aws_route53_record.teleport_entry: Still creating... [20s elapsed]
aws_route53_record.teleport_entry: Still creating... [30s elapsed]
aws_route53_record.teleport_entry: Still creating... [40s elapsed]
aws_route53_record.teleport_entry: Still creating... [50s elapsed]
aws_route53_record.teleport_entry: Still creating... [1m0s elapsed]
aws_route53_record.teleport_entry: Creation complete after 1m5s [id=Z02168653GXSKLJCUVVL0_teleport_A]

Apply complete! Resources: 10 added, 0 changed, 0 destroyed.

Outputs:

zone_id = "Z02168653GXSKLJCUVVL0"
```

Retrieve the invite URL to set up the cluster

Connection test to AWS instance
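The A record was only just created, so the name may take a moment to resolve. A small sketch that waits until `dig` returns the expected address before connecting; `wait_for_dns` and `contains_line` are hypothetical names, `dig` is assumed to be installed, and 13.60.24.255 is the IP from the session below:

```bash
#!/usr/bin/env bash
# contains_line VALUE: succeed if any line on stdin is exactly VALUE.
contains_line() {
  grep -qxF -- "$1"
}

# wait_for_dns NAME EXPECTED_IP [TRIES]: poll every 10 s until NAME resolves to EXPECTED_IP.
wait_for_dns() {
  local name=$1 expected=$2 tries=${3:-30} i
  for ((i = 1; i <= tries; i++)); do
    if dig +short "$name" A | contains_line "$expected"; then
      return 0
    fi
    sleep 10
  done
  echo "wait_for_dns: $name does not resolve to $expected" >&2
  return 1
}

# Usage:
#   wait_for_dns teleport.project.megadodo.org 13.60.24.255 &&
#     ssh teleport.project.megadodo.org -l ec2-user -i ~/.ssh/ssh_key_ec2.pem
```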

```
└─$ ssh teleport.project.megadodo.org -l ec2-user -i ~/.ssh/ssh_key_ec2.pem
The authenticity of host 'teleport.project.megadodo.org (13.60.24.255)' can't be established.
ED25519 key fingerprint is SHA256:zt5YVZ+omhMh5lRxMtweBOUXf6SBuSTFkFUsZmCODt4.
This key is not known by any other names.
Are you sure you want to continue connecting (yes/no/[fingerprint])? yes
Warning: Permanently added 'teleport.project.megadodo.org' (ED25519) to the list of known hosts.
   ,     #_
   ~\_  ####_        Amazon Linux 2023
  ~~  \_#####\
  ~~     \###|
  ~~       \#/ ___   https://aws.amazon.com/linux/amazon-linux-2023
   ~~       V~' '->
    ~~~         /
      ~~._.   _/
         _/ _/
       _/m/'
[ec2-user@ip-172-31-38-168 ~]$
```

Get Teleport Invite URL

```
[ec2-user@ip-172-31-38-168 ~]$ sudo cat /root/teleport_invite_url
User "teleport-admin" has been created but requires a password. Share this URL with the user to complete user setup, link is valid for 1h:
https://teleport.project.megadodo.org:443/web/invite/e45d516d05e5b2eed43cc34d7ddd89d53456fef73

NOTE: Make sure teleport.project.megadodo.org:443 points at a Teleport proxy which users can access.
```

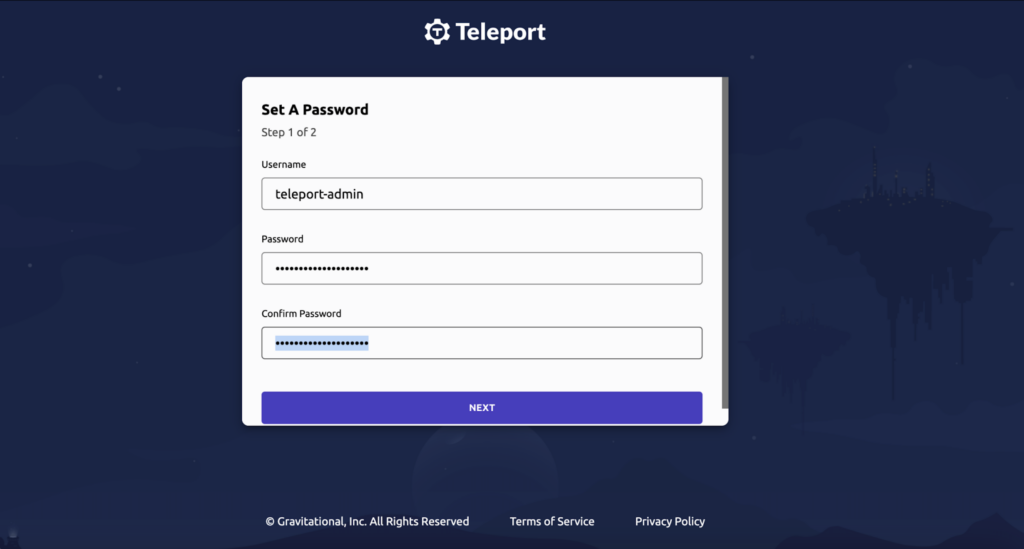
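If you only need the URL itself (for example to paste it somewhere else), you can filter it out of the file. A small sketch; `extract_invite_url` is a hypothetical helper, and the pattern assumes the `/web/invite/<token>` format shown in the output above:

```bash
#!/usr/bin/env bash
# extract_invite_url: print the first Teleport invite URL found on stdin.
extract_invite_url() {
  grep -oE 'https://[^[:space:]]+/web/invite/[[:alnum:]]+' | head -n 1
}

# Usage on the instance:
#   sudo cat /root/teleport_invite_url | extract_invite_url
```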

Copy the link and paste it into your browser; on that page you can set a password for Teleport.

If you did not receive a link, just run the following command again:

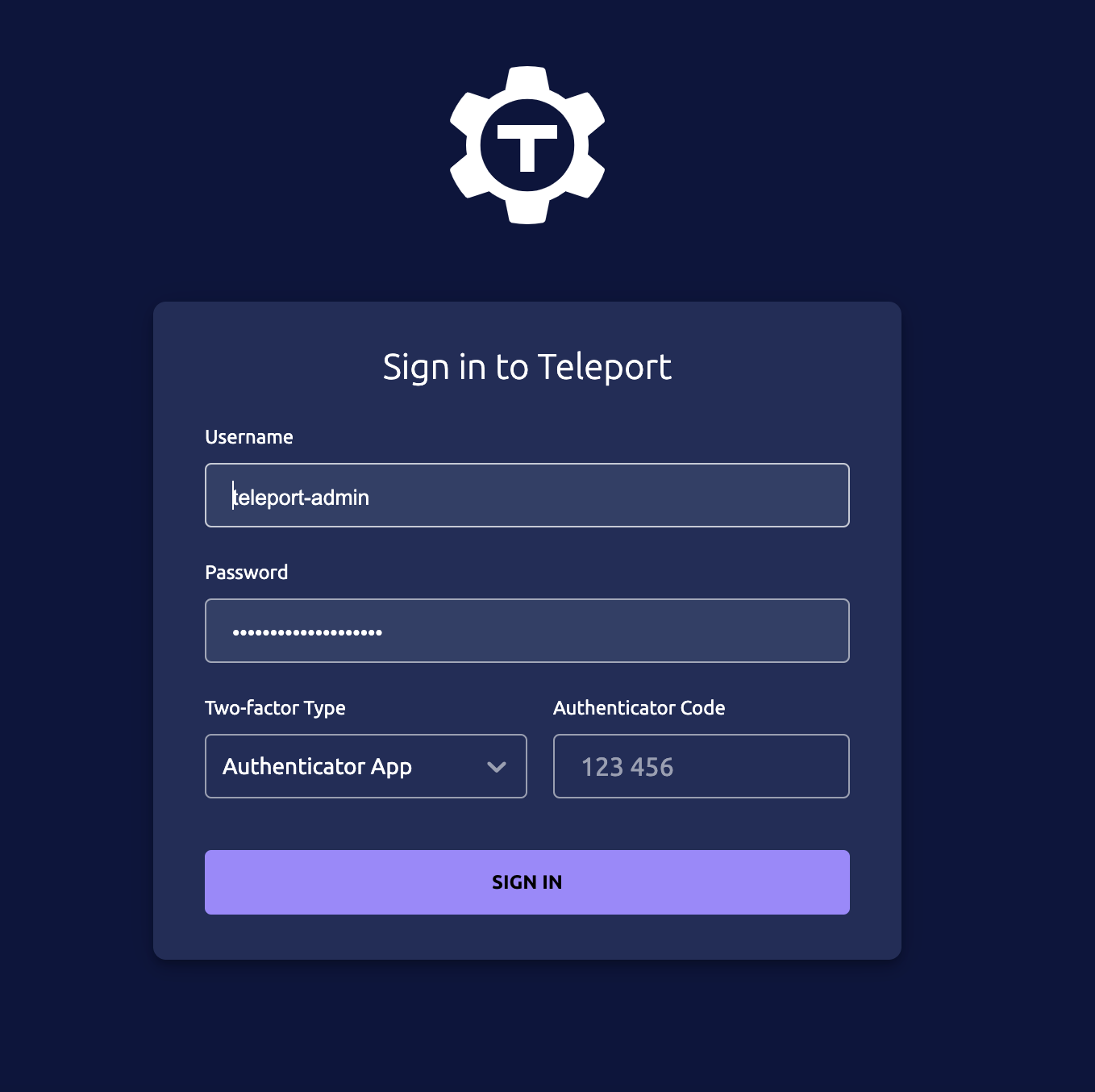

```bash
sudo tctl users add teleport-admin --roles=editor,access --logins=root,ubuntu,ec2-user
```

Page for the initial setup of Teleport:

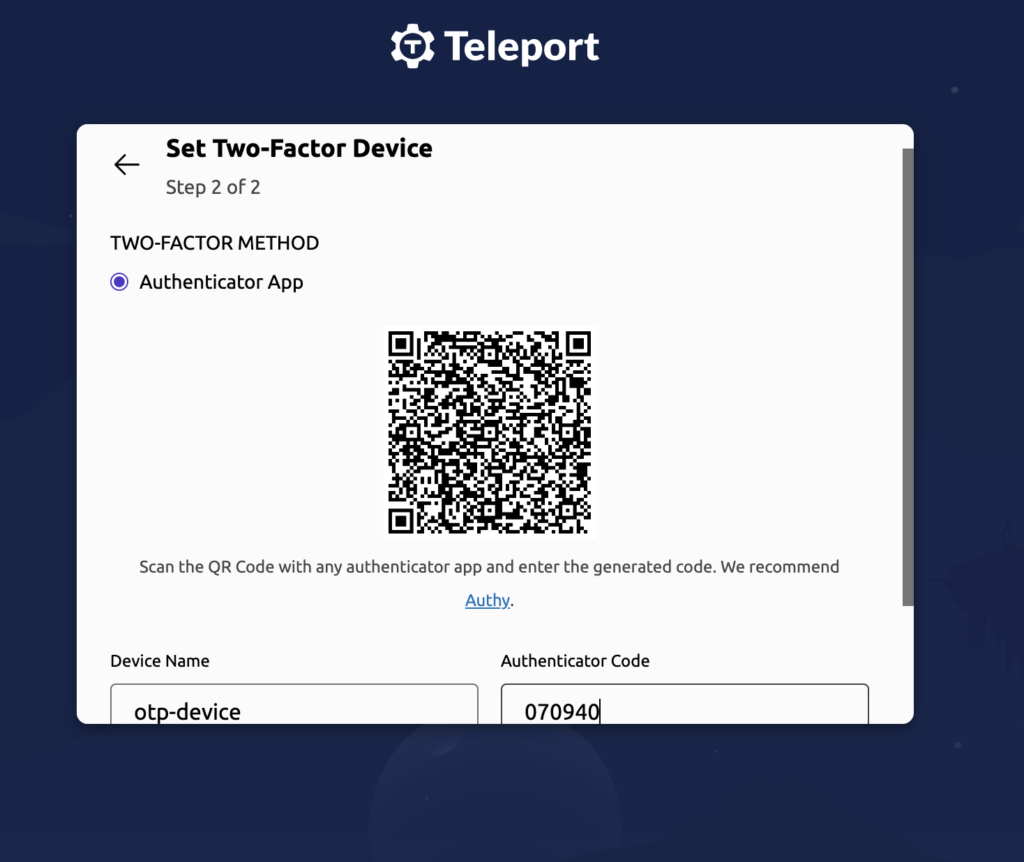

MFA

Two-factor authentication is required by default, which is good.

That’s almost it. 🙂

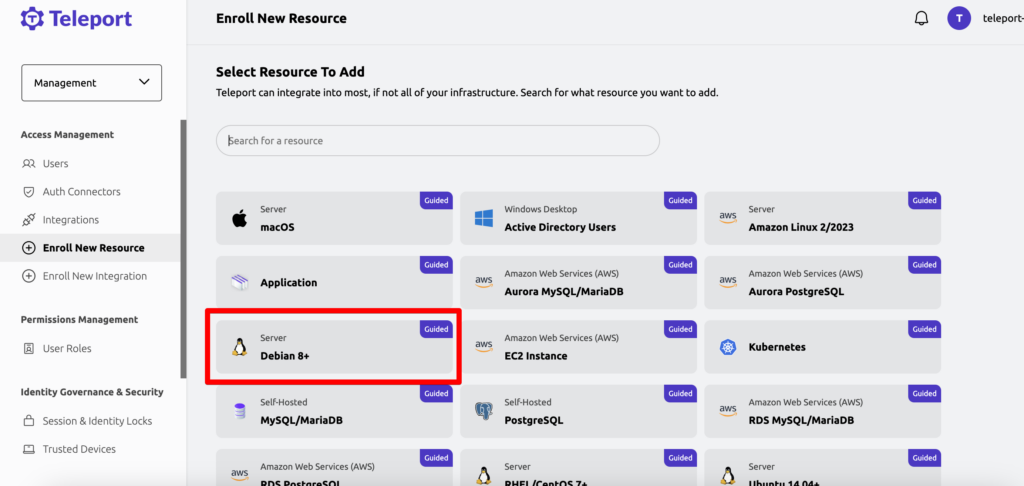

Enroll New Resource (raspi)

Now we need to add a resource to Teleport; in my case, a Debian 8+ machine (Raspberry Pi). Copy the command you receive in the web UI and run it on the remote machine you want to access. I did exactly that on my Raspberry Pi.

Note: the package is about 135 MB for Debian, which is a lot for my limited LTE data volume.

```
root@rpi-garden:~# sudo bash -c "$(curl -fsSL https://teleport.project.megadodo.org/scripts/e45d516d05e5b2eed43cc34d7ddd89d53/install-node.sh)"
sudo: unable to resolve host rpi-garden: Name or service not known
2024-01-25 00:47:23 CET [teleport-installer] TELEPORT_VERSION: 14.3.3
2024-01-25 00:47:23 CET [teleport-installer] TARGET_HOSTNAME: teleport.project.megadodo.org
2024-01-25 00:47:23 CET [teleport-installer] TARGET_PORT: 443
2024-01-25 00:47:23 CET [teleport-installer] JOIN_TOKEN: 4f511f47e5b2eef33ccc4aafdd89fb7f
2024-01-25 00:47:23 CET [teleport-installer] CA_PIN_HASHES: sha256:9afac743c5fd1d236667436423a2f929fexd9253feed159af622d6541d65b592e
2024-01-25 00:47:23 CET [teleport-installer] Checking TCP connectivity to Teleport server (teleport.project.megadodo.org:443)
2024-01-25 00:47:23 CET [teleport-installer] Connectivity to Teleport server (via nc) looks good
2024-01-25 00:47:23 CET [teleport-installer] Detected host: linux-gnueabihf, using Teleport binary type linux
2024-01-25 00:47:23 CET [teleport-installer] Detected arch: armv7l, using Teleport arch arm
2024-01-25 00:47:23 CET [teleport-installer] Detected distro type: debian
2024-01-25 00:47:23 CET [teleport-installer] Using Teleport distribution: deb
2024-01-25 00:47:23 CET [teleport-installer] Created temp dir /tmp/teleport-w80NLClm8b
2024-01-25 00:47:23 CET [teleport-installer] Installing from binary file.
2024-01-25 00:47:24 CET [teleport-installer] Downloading Teleport deb release 14.3.3
2024-01-25 00:47:24 CET [teleport-installer] Running curl -fsSL --retry 5 --retry-delay 5 https://get.gravitational.com/teleport_14.3.3_arm.deb
2024-01-25 00:47:24 CET [teleport-installer] Downloading to /tmp/teleport-w80NLClm8b/teleport_14.3.3_arm.deb
2024-01-25 00:48:01 CET [teleport-installer] Downloaded file size: 141283394 bytes
2024-01-25 00:48:01 CET [teleport-installer] Will use shasum -a 256 to validate the checksum of the downloaded file
2024-01-25 00:48:08 CET [teleport-installer] The downloaded file's checksum validated correctly
2024-01-25 00:48:08 CET [teleport-installer] Using dpkg to install /tmp/teleport-w80NLClm8b/teleport_14.3.3_arm.deb
Selecting previously unselected package teleport.
(Reading database ... 165895 files and directories currently installed.)
Preparing to unpack .../teleport_14.3.3_arm.deb ...
Unpacking teleport (14.3.3) ...
Setting up teleport (14.3.3) ...
2024-01-25 00:49:04 CET [teleport-installer] Found: Teleport v14.3.3 git:v14.3.3-0-g542fbb0 go1.21.6
2024-01-25 00:49:04 CET [teleport-installer] Writing Teleport node service config to /etc/teleport.yaml
A Teleport configuration file has been created at "/etc/teleport.yaml".
To start Teleport with this configuration file, run:

teleport start --config="/etc/teleport.yaml"

Happy Teleporting!
2024-01-25 00:49:04 CET [teleport-installer] Host is using systemd
2024-01-25 00:49:05 CET [teleport-installer] Starting Teleport via systemd. It will automatically be started whenever the system reboots.
Teleport has been started.

View its status with 'sudo systemctl status teleport.service'
View Teleport logs using 'sudo journalctl -u teleport.service'
To stop Teleport, run 'sudo systemctl stop teleport.service'
To start Teleport again if you stop it, run 'sudo systemctl start teleport.service'

You can see this node connected in the Teleport web UI or 'tsh ls' with the name 'rpi-garden'
Find more details on how to use Teleport here: https://goteleport.com/docs/user-manual/
```

The service is enabled and running by default:

```
root@rpi-garden:~# systemctl status teleport.service
● teleport.service - Teleport Service
     Loaded: loaded (/lib/systemd/system/teleport.service; enabled; preset: enabled)
     Active: active (running) since Thu 2024-01-25 00:49:10 CET; 2min 37s ago
   Main PID: 2432 (teleport)
      Tasks: 10 (limit: 1559)
        CPU: 19.515s
     CGroup: /system.slice/teleport.service
             └─2432 /usr/local/bin/teleport start --config /etc/teleport.yaml --pid-file=/run/teleport.pid
```

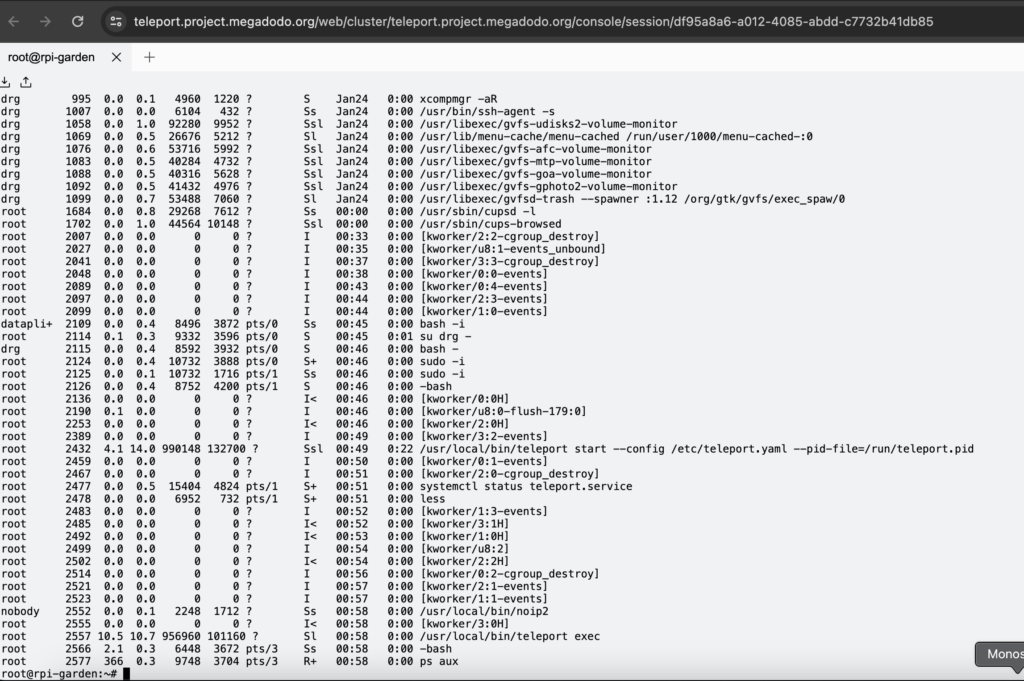

Yehaa, it works!!!

But I still miss the Wormhole feature that Dataplicity provides:

“Wormhole takes a website running on localhost port 80 and makes it available at the provided URL”

On the other hand, Teleport has session playback, so you can watch what you typed days ago 😉. Its session management also looks a tiny bit better than Dataplicity's.

Terraform Scripts download

Terraform scripts can be found here

https://github.com/danielgohlke/terraform-teleport-server

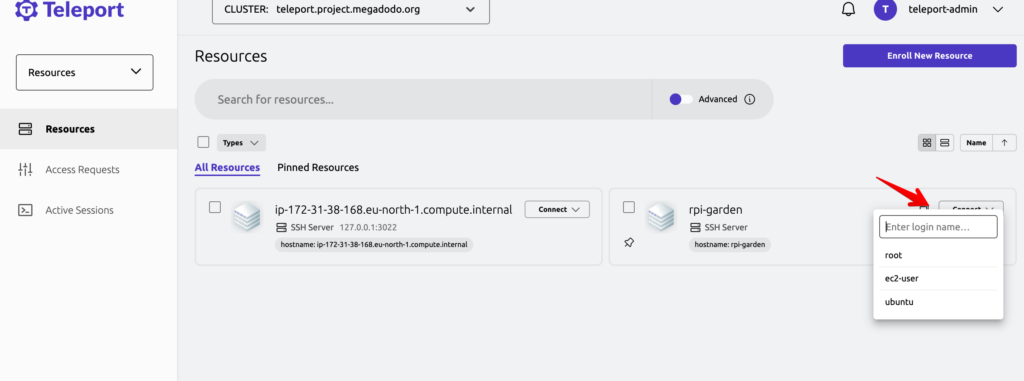

Update Host Users

If you want to allow more or fewer logins, you can update them with the Teleport CLI (tctl).

Note that --set-logins replaces the existing logins rather than adding to them. In this example, only ec2-user and ubuntu remain available to teleport-admin:

```
tctl users update teleport-admin --set-logins=ec2-user,ubuntu
User teleport-admin has been updated:
New logins: ec2-user,ubuntu
```
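Because `--set-logins` replaces the whole list, adding a single login means passing the existing ones as well. A small sketch of a helper that appends logins to a comma-separated list while skipping duplicates; `merge_logins` is a hypothetical name, and you still have to supply the current list yourself:

```bash
#!/usr/bin/env bash
# merge_logins EXISTING NEW...: append NEW logins to the comma-separated
# EXISTING list, skipping any that are already present.
merge_logins() {
  local result=$1 login
  shift
  for login in "$@"; do
    case ",$result," in
      *",$login,"*) ;;                       # already in the list
      *) result=${result:+$result,}$login ;; # append (no leading comma if empty)
    esac
  done
  printf '%s\n' "$result"
}

# Usage, re-adding root to the list from the example above:
#   tctl users update teleport-admin --set-logins="$(merge_logins ec2-user,ubuntu root)"
```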